UC Berkeley's Professor Stuart J. Russell discusses the near- and far-future of artificial intelligence, including self-driving cars, killer robots, governance, and why he's worried that AI might destroy the world. How can scientists reconfigure AI systems so that humans will always be in control? How can we govern this emerging technology across borders? What can be done if autonomous weapons are deployed in 2020?

ALEX WOODSON: Welcome to Global Ethics Weekly. I'm Alex Woodson from Carnegie Council in New York City.

This week's podcast is with Dr. Stuart Russell, professor of computer science and Smith-Zadeh Professor in Engineering at University of California, Berkeley. Dr. Russell is the co-author of the hugely influential textbook Artificial Intelligence: A Modern Approach, which was first published in 1995. His latest book is Human Compatible: AI and the Problem of Control.

This interview was recorded a couple weeks ago after Dr. Russell's talk at the AAAI Conference on Artificial Intelligence here in New York. His lecture was titled "How Not to Destroy the World with AI." So we discussed that, the near and far future of the technology, and why he's calling for some major changes in how scientists design AI systems. We also spoke about self-driving cars and lethal autonomous weapons systems, or killer robots.

For a lot more on AI, you can go to carnegiecouncil.org. If you're interested in killer robots, my last podcast is with The New School's Peter Asaro. He is one of the top voices on that subject and we talked about the risks, governance, and ethics of this emerging technology. I also recently spoke to IBM's Francesca Rossi, the general chair of AAAI 2020. We talked in-depth about the theories and philosophies behind AI decision-making.

But for now, here's my talk with Stuart Russell.

Dr. Russell, thanks for coming. I know it has been a very busy day for you.

STUART RUSSELL: It's a pleasure to be here.

ALEX WOODSON: You were speaking this morning at the AAAI Conference. What's new this year at AAAI? What are people talking about this year that maybe they haven't in the years before?

STUART RUSSELL: AI, as you may have heard, is a hot topic these days, so the attendance, the number of paper submissions, and so on has exploded over the last five or six years. Most of that has been to do with deep learning, basically building very large pattern-recognition systems and then finding ways to use them for computer vision, speech recognition, language processing, and various kinds of things.

That has been a little bit of a controversial topic within what we might call the "mainstream" AI community. It was always a popular topic within the neural network community—and they feel they're in the ascendency right now—but there is a big part of the AI community based on topics like representation and reasoning, and I think what we're seeing now is the beginnings of some kind of rapprochement between these two approaches. So how do we combine large-scale learning from data with knowledge and reasoning that I think everyone really understands a system has to have in order to be intelligent? There have been quite a few papers and talks on how you might go about that. I think that's a very good development.

ALEX WOODSON: As you said, AI is a very hot topic these days. I think you said in the last five or six years you've seen papers and conversations explode about this. Do you think that is to do with how the tech has evolved, or has society/culture evolved into a place where this is more of a conversation?

STUART RUSSELL: I think it's primarily the technology. AI has had its ups and downs. We had a very big boom in the mid-1980s, which fizzled out because the technology was not really up to scratch. So it's possible to have technical advances in AI which can generate a lot of press, but when you try to put them into practice in real-world applications, turn out not to be technologically robust.

What seems to be happening with deep learning is that the range of applications is pretty broad where it works fairly well, but certainly it doesn't work everywhere. It tends to require a huge amount of data before it reaches a good level of predictive capability. Humans typically need one or two examples before we understand a concept.

For example, if you have a child and you want to teach them what a giraffe is, you show them a picture in a picture book and say, "This is a giraffe." You can't buy a picture book that has 2 million pictures of giraffes because humans just don't need that many examples to learn the concept. But right now machines do need thousands or millions of examples of visual concepts to learn.

There's no doubt in my mind that the current level of technology is not going to continue to improve in capabilities until it exceeds human intelligence unless we solve some of these major open problems: How does knowledge combine with learning? How do you make decisions over long timescales? How do you accumulate concepts over time and build concepts on top of concepts? There's a whole panoply of intellectual tools that human beings have that we just don't know how to give machines yet. So there's a lot of work to do between here and there.

ALEX WOODSON: From speaking with Francesca Rossi a few weeks ago—and we had Wendell Wallach here a couple of years ago—we already know that AI is embedded into our daily lives. As you said, there's a lot of work that needs to be done, and there's a lot of work that is being done. Looking forward five to ten years, where will we be with AI? How will the world be different because of AI?

STUART RUSSELL: I think there are two paths. One is, if you take everything we know how to do now, how is that going to roll out in terms of continued economic applications and so on? The other question is, what new capabilities are going to come online?

Roughly speaking, you could say the last decade was the decade where we figured out vision, how to recognize objects, how to track moving objects, and so on. I think this decade will be the decade where we figure out language. Again, it won't be perfect, but the ability to actually understand and extract content from language is an incredibly important part of billions of jobs that people do, and just our daily lives.

If you think about the smart speaker that's on your kitchen table or the digital assistant that's in your cellphone, it's okay at translating your question into a search engine query and giving you back the results, but you can't really have a conversation with it, and it doesn't understand what you're doing, who your children are, where they go to school, or what happens when one of your children breaks their arm at school and what are you supposed to do, and so on. So there's a whole bunch of stuff that machines simply don't understand because they don't understand language. They can't read your emails or listen to your conversations and extract an understanding of what's going on.

If that changes, then I think we would see AI systems playing a much more integrated role in our daily lives. We would start to rely on the intelligent system on your cellphone as a CEO relies on their executive assistant to manage their calendar and their lives and make sure that everything works. We will start to have this kind of help, which is good. I think a lot of us need help in managing our complicated lives.

I think another thing that is likely to be happening is that self-driving cars will move from being prototypes into being real products. That will probably be the most visible implementation of AI so far because it will have a huge impact on how cities work and how our whole transportation system works.

Another possibility is that AI systems will move into weapons. That would also be a very high visibility application of AI. I'm hoping it's not a reality because I don't want my field to be associated with killing people as its primary function, but the way things are going, both with the technology and the progress of international diplomacy, it looks likely that nations will start deploying fully autonomous weapons. In fact, Turkey has already announced that they will be deploying a fully autonomous weapon this year against the Kurds in Syria.

ALEX WOODSON: That's scary. I want to get to autonomous weapons. I talked to Peter Asaro a couple of weeks ago about that. As you said, that could be an incredibly huge development.

I want to speak a little bit about self-driving cars first, though, because as you said that is going to be visible. That's going to be something that affects all of us probably. Just to start this conversation, how close are we to that becoming a reality? And what really needs to happen for someone like myself to go out and buy a self-driving car and have it take me around?

STUART RUSSELL: I think what many people don't realize is that the first self-driving car was demonstrated on the freeway in 1987. Since then we have been working on, shall we say, increasing the number of nines. Reliability engineers talk about five nines or six nines or eight nines. What that means is, it's 99.999 percent reliable or 99.999999 percent reliable. If you think about a human driver, let's say a taxi driver who works eight hours a day for ten years, that's 100 million seconds of driving and in any one of those seconds you can make a serious mistake. But a good professional taxi driver shouldn't have a serious accident in those 100 million seconds of driving. So that means they are eight-nines reliable. If you are six-nines reliable, which sounds pretty impressive—99.9999 percent reliable—you're still going to die about once a month.
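The "number of nines" arithmetic here can be checked with a short calculation. The sketch below is only a back-of-the-envelope illustration: it assumes, as the taxi-driver example does, that reliability is counted per second of driving and that a driver works eight hours a day. The function names are made up for this example.

```python
# Back-of-the-envelope check of the "number of nines" arithmetic.
# Assumption: reliability is measured per second of driving, and a
# working day is eight hours, as in the taxi-driver example.

SECONDS_PER_DRIVING_DAY = 8 * 60 * 60  # 28,800 seconds

# Ten years of eight-hour days: roughly the "100 million seconds" figure.
career_seconds = SECONDS_PER_DRIVING_DAY * 365 * 10


def failure_probability(nines: int) -> float:
    """Per-second chance of a serious mistake for an 'n nines' system."""
    return 10.0 ** (-nines)


def driving_days_between_failures(nines: int) -> float:
    """Expected number of eight-hour driving days between serious mistakes."""
    expected_seconds = 1.0 / failure_probability(nines)
    return expected_seconds / SECONDS_PER_DRIVING_DAY


if __name__ == "__main__":
    print(f"Ten-year career: {career_seconds:,} seconds of driving")
    for n in (6, 8, 9):
        days = driving_days_between_failures(n)
        print(f"{n} nines: one serious mistake every ~{days:,.0f} driving days "
              f"(~{days / 365:.1f} years)")
```

Under these assumptions, six nines gives a serious mistake roughly every 35 driving days, eight nines roughly once per ten-year career, and nine nines about ten times less often than that.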

It's no good being able to demonstrate one successful run. You need to be able to demonstrate that you have probably nine-nines. You need to be 10 times as reliable as a professional human driver before we're going to accept the technology. And we're not close to that yet. Some of the leading groups like Waymo are getting closer to that but only in relatively restricted circumstances—good weather, daytime, well-marked roads. So nighttime driving, in the rain, in—

ALEX WOODSON: New York City.

STUART RUSSELL: I was thinking more like Cairo or Jakarta, somewhere where the driving is a little more fluid and traffic lights are at best suggestive. I think we're nowhere close to that level of capability.

I think two things have to happen. One is that, in order to deploy any current technology or anything that's in the near future, we will have to have ways of circumscribing when it can and when it cannot be used so that people are not tempted to use it in circumstances where it's too dangerous.

The other thing is, how do we make it more reliable? What Waymo is doing is the right approach. In order to make it reliable you have to be able to handle the unexpected circumstance because that's the case that you get wrong, and those happen often enough that you can't afford to have that error rate.

So trying to build systems that you train on lots and lots of training data and hoping that that's enough, that if you just get it to behave like the human driver in all the training set cases, everything's going to be fine; I don't think that's going to work. A human driver can deal with unexpected circumstances for two reasons: One is, they know what they want. They're trying to get to the destination, but they're not trying to kill people or run over dogs and cats or whatever it might be, so they understand what does and does not count as successful driving.

And they understand, as it were, the physics of the world. They know that if I push on this, I go forward; if I push on that, I go backward. A system that is just trained from input/output examples doesn't know any of that. It doesn't know that people don't like to be dead, it doesn't know that pressing on this accelerator makes the car go forward. So it has a really hard time dealing with an unexpected circumstance that isn't in the training data.

We've seen this over and over again in many, many other applications. The right solution is the one that we have adopted for things like chess programs, where you're always getting into unexpected situations. You're almost never playing a game that has been played before. Pretty soon, after a few moves, you get to a position that has never been seen before, and you have to figure out what to do. The way you do that is to look ahead, to say: "Well, these are the things I could do. That one leads to an undesirable situation, and that one leads to a good situation." That's what you have to do with driving. That's what Waymo is doing and some of the other leading groups, and I think that's the right approach.
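The look-ahead idea can be illustrated with a toy example. The sketch below is not how Waymo or any chess program is actually built; it is a minimal depth-limited search on a hypothetical three-lane road, where the lane layout, obstacle positions, scores, and function names are all invented for illustration.

```python
from typing import List, Tuple

State = Tuple[int, int]  # (lane, distance travelled); a hypothetical toy world

LANES = 3
MOVES = {"left": -1, "straight": 0, "right": 1}
# Hypothetical obstacle positions: lane 0 looks clear at first but is a dead end.
OBSTACLES = {(1, 3), (0, 4), (1, 4)}


def step(state: State, action: str) -> State:
    """Apply an action: shift lane and move one unit forward."""
    lane, distance = state
    return (lane + MOVES[action], distance + 1)


def actions(state: State) -> List[str]:
    """Legal actions; none are available after a crash."""
    if state in OBSTACLES:
        return []
    lane, _ = state
    return [a for a, shift in MOVES.items() if 0 <= lane + shift < LANES]


def score(state: State) -> float:
    """Crashing is heavily penalised; otherwise progress is rewarded."""
    return -100.0 if state in OBSTACLES else float(state[1])


def lookahead_value(state: State, depth: int) -> float:
    """Best score reachable from `state` within `depth` further moves."""
    options = actions(state)
    if depth == 0 or not options:
        return score(state)
    return max(lookahead_value(step(state, a), depth - 1) for a in options)


def choose_action(state: State, depth: int) -> str:
    """Pick the action whose look-ahead value is highest."""
    return max(actions(state),
               key=lambda a: lookahead_value(step(state, a), depth - 1))


if __name__ == "__main__":
    start = (1, 2)  # middle lane, obstacle directly ahead
    print("one move ahead: ", choose_action(start, depth=1))
    print("two moves ahead:", choose_action(start, depth=2))
```

Looking only one move ahead, swerving left and swerving right look equally good. Looking two moves ahead reveals that the left lane leads into a position with no safe move, so the search prefers the right lane, even though this exact situation was never stored anywhere in advance.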

ALEX WOODSON: Are you confident that we will get to a place where we know how to govern this technology when self-driving cars are a big part of society, or do we still have a lot of work to do in that respect?

STUART RUSSELL: The governance question is a good one because it has taken us a long time, and we're still working just on the governance of ordinary cars and ordinary people. The early response to the car was, "Oh, it's way too dangerous, and we have to have people walking in front of it with a flag." To some extent that was right. The death rates per mile traveled were frankly horrifying in the early days of motoring, and we would never allow that to happen now.

It's only because of much better brakes, much better steering, and much better training of drivers, and the modern assists like braking systems and collision-warning systems and so on. So things are gradually getting better, plus the laws about speed and turn signals and so on. So lots of stuff gradually accumulated over the decades to try to make this a safer system.

We're going to have the same process with self-driving cars. There may be some countries that over-regulate and some countries that under-regulate. At the moment it looks a little bit like under-regulation because companies are kind of experimenting on the general public with technology that is a little bit immature right now. I would say the motivation is a good one, which is to try as quickly as possible to get to a point where you can deploy this life-saving technology. I think 1.2 million people a year are killed in car accidents, so if you could cut that by a factor of 10, that would be a great thing to do. But the question is, is it fair to be experimenting on the general public without their consent and killing a few of them along the way?

ALEX WOODSON: I guess another issue that could arise from self-driving cars is putting a lot of people out of work as well, cab drivers, truck drivers, and that type of thing.

STUART RUSSELL: Typically governments have stayed out of that, the creative destruction of capitalism just goes on. But this could be very sudden. I think it's already an issue. Trucking companies are having a hard time recruiting people into this career because people see that it doesn't have a long life as a career.

ALEX WOODSON: I want to move into lethal autonomous weapons now. As I said, I spoke with Peter Asaro a couple of weeks ago about that, and he is very much against them. I asked him how we can stop this proliferation, and he said: "That's easy. Just ban them." As you said a few minutes ago, Turkey has developed this technology, and you think that they might use it against the Kurds later this year.

STUART RUSSELL: That's what I read in the press that the government has announced. They have a drone called the Kargu. The videos—some of them are simulated, some of them are real—show a quadcopter about as big as a football, and it can carry I think a 1.1 kg explosive payload, which is way more than enough to kill people. The manufacturer advertises it as having human-tracking capability, face-recognition capability, and autonomous-hit capability. I don't know how else you spell it, but that sounds like an autonomous weapon to me. The government said they would be deploying them in Syria in early 2020, which is now.

ALEX WOODSON: I assume from your comments that you're against this technology, or against this specifically?

STUART RUSSELL: I think there are a number of reasons why autonomous weapons are problematic. There are also reasons why they might have some advantages. From my point of view, the main reason not to allow the development of autonomous weapons is that, because they're autonomous, they are what we call "scalable." In computer science, this idea of scalability is in some ways our raison d'être. With computers, if you can do it once, you can do it a billion times for not much more money. You can do that because the computer can manage that process by itself; it doesn't need a human to press the add button each time. You say, "For one to a billion, add these up," and it just adds them up.

It's the same thing with killing. If you can do the killing without the human pressing the button each time, then you can scale it up to kill literally a billion people if you could find them all. That form of weapon of mass destruction is something that would be extremely destabilizing because it's just very tempting to want to use it. Compared to nuclear weapons it has lots of advantages: It doesn't leave a huge radioactive crater or create fallout, you can pick off just the people you want to get rid of so you can occupy a territory safely, etc. So if you're in the business of genocide, ethnic cleansing, or tyranny, then this is your weapon. We have enough trouble with the weapons of mass destruction we already have. Why would we want to create a new and potentially much more dangerous, much cheaper, much easier-to-proliferate class of weapons, I don't know.

ALEX WOODSON: If this weapon is deployed in Syria later this year, what should the reaction be? I was talking to Peter about this, and he is meeting with different organizations at the UN level. As a scientist and researcher in this field, what could people like you do if this technology gets deployed? You're obviously against it for many good reasons. How can you react to that?

STUART RUSSELL: I think as a community we could get together and denounce it. As you mentioned, I was just at AAAI this morning. We—meaning AI researchers who are opposed to autonomous weapons—have been trying actually for quite a few years to get AAAI to have a policy position on this matter.

Interestingly, it's in the charter of AAAI. The legal document that set up the organization says that we should promote the "beneficial use" of artificial intelligence. Clearly this is not promoting the beneficial use of artificial intelligence, and so we should be taking a policy position, but it has been very difficult to get the organization to take any steps in that direction.

We have separate organizations, like the Campaign to Stop Killer Robots, which are working on this. We have had open letters. The vast majority of leading AI researchers have signed the open letter opposing autonomous weapons. We had meetings in the White House in the previous administration, presentations at the United Nations, meetings with ambassadors and governments, and so on.

The diplomatic process currently goes through Geneva, the Convention on Certain Conventional Weapons (CCW)—that's the short name for it—and it is a consensus-based diplomatic process. That means it's extremely difficult to get anything done when there are countries that don't want something to happen. Right now, the United States, the United Kingdom, and Russia are opposing a treaty, as are Israel, South Korea, and Australia. I think those are the main countries opposed to a treaty. So it's hard to move anything.

It might be that we have to go through the media, through the populations, the people of the Earth who don't want to be subject to this technology, pressuring their governments to do something, and that means going through the General Assembly instead of Geneva. That's a possibility.

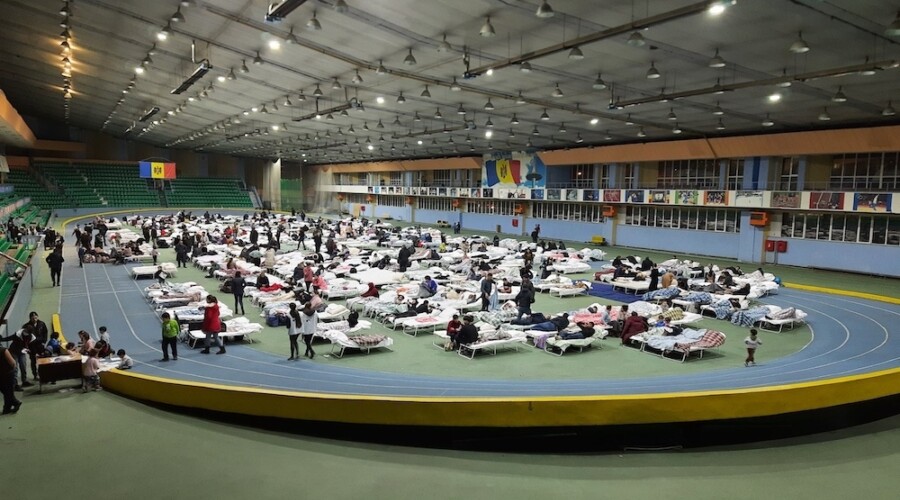

But if you think about what happened when one small Syrian boy—who had been trying to escape and had drowned—was found dead on a beach in the Mediterranean, that photograph basically changed European policy toward refugees and maybe set off a huge chain of events. Now imagine that in Turkey that little boy is running along the street, and a drone is chasing him and blows his head off. How is that going to play with the world's populations? How are people going to respond to seeing that happen? I suspect that the repercussions could be quite big.

ALEX WOODSON: I want to look a little further into the future, and I think lethal autonomous weapons can be part of that conversation as well. The title of your talk this morning at AAAI was "How Not to Destroy the World with AI." Are lethal autonomous weapons—looking far into the future where the world could possibly be destroyed—your biggest concern with AI, or is that just one of them?

STUART RUSSELL: Actually, to the extent possible, I would like to keep that as a separate concern because the problem with autonomous weapons is not that they would be too smart and would take over the world and destroy the human race. It's just that we would make billions of them and kill loads of people with them.

They don't have to be very smart. It's unlikely that you would imbue an autonomous weapon with the mental capability to take over the world. It just doesn't make sense. Generally people want weapons to do what they're told and nothing more. There is a connection, which is obviously that if there are available vast fleets of autonomous weapons, then if you were an AI system that was disembodied, it would be convenient to be able to take over those weapons and carry out things in the physical world, so it could be co-opted by a sufficiently intelligent system for their own ends.

My main concern is that we are investing hundreds of billions of dollars in creating a technology that we have no idea how to control. No one so far has come up with even a plausible story about how a less intelligent species, namely us, could control a more intelligent set of entities, these purported machines that we're trying to create, and keep control of those machines forever.

So what I'm trying to do is understand where things go wrong. Why do we lose control? Why do all these stories end up with conflict between humans and machines? The reason seems to be that we have been thinking about AI in the wrong way all along. We have been thinking about AI in a very simple-minded way that we create these machines that are very good at achieving objectives, and then we put those objectives into the machine, and then they achieve them for us.

That sounds great until you realize that our whole history is full of these cautionary tales of how that story goes wrong, starting with King Midas. He put the objective into the machine—in this case it was the gods—"I want everything I touch to turn to gold," and he got exactly what he asked for, and then he dies in misery and starvation. You've got the genie who gives you three wishes, and the third wish is always, "Please undo the first two wishes because I made a big mess of things."

That way of doing things, which I would call the standard model, of creating machines that optimize objectives that are supplied directly by the human, isn't going to work in the long run. We have sort of been okay until recently because our machines have mostly been in the lab, they have mostly been doing things like playing chess, and they have mostly been pretty stupid.

Our chess programs, for example, would never think of bribing the referee or hypnotizing the opponent because they're too stupid, they're not that smart. But if they were smarter, they would think of things to do other than playing moves on the board that would improve their chances of winning.

The approach that I am proposing is actually to get rid of that standard model, to say that's just a poor engineering approach, and it's a poor engineering approach because the more we succeed at it the worse it gets. The smarter the machine, the worse the outcome if it's not pursuing the right objective, and we don't know how to specify the objective correctly.

Instead, we should design machines that know that they don't know what the objective is, that humans may have preferences about the future, but the machine knows it doesn't know what they are and then acts accordingly. When you formulate the problem that way, the obligation on the machine is going to be to find out more about what we really want, but also to act in a way that is unlikely to violate the preferences that we might have about the world that it doesn't know about so it will behave cautiously—minimally invasive behavior if you want to think of it that way. It will ask permission before doing anything that might violate some preference that it doesn't know about, and it will allow itself to be switched off.

We will want to switch it off if it's about to do something harmful. It doesn't want to do anything harmful—it doesn't know what that means, but it doesn't want to do anything harmful—and so it should allow us to switch it off, whereas under the old model a machine with a fixed objective would never allow itself to be switched off because then it would fail.
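The switch-off argument can be made concrete with a small expected-value calculation. This is a toy sketch under strong assumptions that are not stated in the interview: the machine's uncertainty is a simple discrete belief over how much the human values a proposed action, the human knows the true value, and the human switches the machine off exactly when that value is negative. All numbers and names are hypothetical.

```python
from typing import Callable, List, Tuple

Belief = List[Tuple[float, float]]  # (value of the action to the human, probability)


def expected(belief: Belief, f: Callable[[float], float] = lambda u: u) -> float:
    """Expected value of f(u) under the machine's belief about u."""
    return sum(p * f(u) for u, p in belief)


def option_values(belief: Belief) -> dict:
    """Value of each choice from the machine's point of view."""
    return {
        # Go ahead regardless of what the human thinks.
        "act": expected(belief),
        # Do nothing at all.
        "switch_self_off": 0.0,
        # Propose the action and let the human decide. Assumption: the human
        # permits it only when it is actually good for them (u > 0).
        "defer_to_human": expected(belief, lambda u: max(u, 0.0)),
    }


# A machine that is unsure whether its plan is harmful (hypothetical numbers).
uncertain = [(-10.0, 0.3), (1.0, 0.4), (5.0, 0.3)]
# A machine that is certain its fixed objective is correct.
certain = [(5.0, 1.0)]

if __name__ == "__main__":
    for name, belief in (("uncertain", uncertain), ("certain", certain)):
        print(name, option_values(belief))
```

With the uncertain belief, deferring to the human is worth more than either acting or shutting down, so the machine has a positive incentive to leave the off switch in human hands. With a belief that is certain, deferring adds nothing over acting, which is one way to see why a machine with a fixed objective gains nothing from being switchable.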

So I believe that this approach to building AI systems is clearly a better one from the point of view of us being able to retain control over machines. Whether it's the final solution or whether we need additional thought, I don't know, but it's a step in the right direction.

ALEX WOODSON: Would this change the way that researchers working on AI right now have to approach their work?

STUART RUSSELL: Yes.

ALEX WOODSON: So I would imagine there has probably been some tension when you bring up this idea.

STUART RUSSELL: I have to say I have not heard any pushback that holds together. There's a natural resistance to change—"There must be some reason why what you're saying isn't right"—but I'm not aware of anyone putting forward any coherent objection that's sustainable.

But I think it's a reasonable question to say: "Well, that's great, but until that kind of technology exists we're going to have to keep using the old kind." So it's incumbent on us to instantiate this new approach in the particular technical areas that people need.

A simple example would be machine learning, where you learn to recognize objects and images, which is one of the big things that has happened in the last decade. How does that work right now? Basically what you do is you fix an objective, which is typically how well your system classifies a set of training examples. You build a data set of a million photographs with labels, and then you train an algorithm to fit that training set as well as possible. What does "as well as possible" mean? Typically, what it has meant in all these systems is it makes the fewest number of mistakes.

But then you realize, Okay, well, are all mistakes equal? And the answer is no, clearly not, and Google found this out because they did it in practice. If you classify a human being as a gorilla, that's a really bad mistake. It was a huge public relations disaster for Google when they did that as well as being very upsetting to people. That was a really expensive mistake, whereas classifying one type of terrier as another type of terrier is not that serious. But you stated the objective in the form that all errors are equal, so you made a mistake in stating the objective, and you pay the price.

Then the question is, Well, how should we do this differently? The costs of misclassification are actually unknown. There are about 20,000 visual categories of object that people use, and any one of those could be classified as one of the other categories, so that's 400 million different types of errors that you could make. So there are really 400 million numbers to describe the costs of misclassification, and no one has ever written them down. We literally do not know what they are.

We can make some broad-brush guesses: Classifying a human as anything but a human is probably pretty bad, mixing up two kinds of cars or two kinds of dogs is not that bad. But the machine clearly should be operating with uncertainty about what the costs of misclassification are, and that technology doesn't exist. We don't have machine learning algorithms that have uncertainty about what we call the "loss function," which means the cost of making errors.

You could build these kinds of algorithms, and they would behave very differently. They would, for example, refuse to classify some images. They would say: "Well, this is too dangerous. I have some inkling about what it might be, but I can see that this might be very high cost if I made an error," or maybe I need to know more about the costs of making this kind of error, so I go back to the human designer or user and say: "Which is worse, classifying a dog as a cat or an apple as an orange?" or whatever it might be. So you would have a much more interactive kind of learning system rather than we just dump data in and get a classifier out. This kind of technology can be developed, and the sooner we do it the better.
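As a rough illustration of what a classifier with an uncertain loss function might look like, the sketch below keeps a lower and upper bound on each misclassification cost and declines to answer when even its best guess could be too expensive. Everything here is an assumption made for illustration: the four labels, the cost ranges, the threshold, and the pessimistic worst-case decision rule, which is only one of several possible ways to act under uncertain costs.

```python
from typing import Dict, Tuple

# 20,000 categories give 20,000 x 20,000 (true, predicted) cost entries.
CATEGORIES = 20_000
COST_ENTRIES = CATEGORIES * CATEGORIES  # 400 million

LABELS = ["human", "gorilla", "terrier_a", "terrier_b"]  # toy label set
TERRIERS = {"terrier_a", "terrier_b"}
ABSTAIN = "ask a human"


def cost_bounds(true_label: str, predicted: str) -> Tuple[float, float]:
    """Hypothetical (low, high) range for the cost of one kind of error."""
    if true_label == predicted:
        return (0.0, 0.0)
    if true_label == "human":
        return (50.0, 1000.0)  # misclassifying a person: possibly very costly
    if true_label in TERRIERS and predicted in TERRIERS:
        return (0.5, 1.5)      # confusing two terriers: known to be minor
    return (1.0, 20.0)         # everything else: poorly known


def worst_case_expected_cost(probs: Dict[str, float], predicted: str) -> float:
    """Expected cost of answering `predicted`, using the upper cost bounds."""
    return sum(p * cost_bounds(true, predicted)[1] for true, p in probs.items())


def classify_or_abstain(probs: Dict[str, float], max_cost: float = 5.0) -> str:
    """Answer only if the safest label is acceptably cheap in the worst case."""
    best = min(LABELS, key=lambda label: worst_case_expected_cost(probs, label))
    if worst_case_expected_cost(probs, best) > max_cost:
        return ABSTAIN
    return best


if __name__ == "__main__":
    print(f"{COST_ENTRIES:,} cost entries for {CATEGORIES:,} categories")
    dog_photo = {"human": 0.0, "gorilla": 0.0, "terrier_a": 0.6, "terrier_b": 0.4}
    risky_photo = {"human": 0.3, "gorilla": 0.7, "terrier_a": 0.0, "terrier_b": 0.0}
    print(classify_or_abstain(dog_photo))
    print(classify_or_abstain(risky_photo))
```

In this toy setup the terrier photo gets a label because any mistake is cheap, while the ambiguous photo is handed back to a person because every possible answer carries a worst-case cost above the threshold. A fuller system would also use the human's reply to narrow the cost ranges over time.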

ALEX WOODSON: In watching some of your presentations—and I believe you said a version of this here—you say, "You have to ask yourself, what if we succeed?" It sounds like you have taken that with AI: "What if we succeed?" and you've looked far into the future and the results aren't good. With this new process that you're describing, looking at that far into the future, optimistically what if we succeed? What will be the benefits to humans in the near future or the far future if this new type of AI really works?

STUART RUSSELL: In some ways it can be the golden age for humanity. Just from a technological point of view—these goals I think are shared across the AI community—you can eliminate the need for dirty, dull, dangerous work of a kind that didn't use to exist. I'm not saying the hunter-gatherer life was all roses, but it didn't involve going into a building and doing the same thing 10,000 times a day and doing the same day 10,000 times until you retire and die. That was not part of hunter-gatherer existence. Hunter-gatherer existence was varied, you acquired a lot of different skills, you cooperated closely with other people, and so on.

If you were writing science fiction 10,000 years ago, and you said, "You know, in the future we're going to go into these buildings and we're going to do the same thing 10,000 times a day until we die," you'd say: "That's ridiculous! Who would ever choose a future like that? That's never going to happen. Go and write another novel." But in fact that's what happened. That time in history is going away.

There are multiple major questions that this brings up. One is, what is everyone going to do? Another one is, how do we maintain our vitality as a civilization and as individuals when the incentive to do so has gone away? We go to school. We learn a lot to a large extent because it's necessary for our civilization. Otherwise, within a generation our civilization would collapse.

But that's no longer true because in a generation our civilization wouldn't collapse if all of the skills needed to run it are in the machines. This story—you can see it in WALL-E, E. M. Forster wrote a story in 1909 called "The Machine Stops." When machines are looking after everything, the incentive for humans to know, understand, and learn goes away, and then you have a problem. And it goes back in mythology—the lotus-eaters and all the rest of it. So how we deal with that is a major question. That's obviously at the extreme end of what job is everyone going to do, but it's a continuum with that issue.

Assuming that we avoid these pitfalls of enfeeblement—another big pitfall is misuse, where people use AI for nefarious ends in ways that are catastrophic—the question we face is really, what is the future that we want? Until recently we never had a choice about the future. We were all trying to not die, trying to find enough to eat, trying to just keep stuff together. We never really got to choose a future. Assuming we have this kind of AI technology, then we could choose the future, and what would it be like? That's a difficult question.

I have actually been looking at writers who try to project a positive future with superintelligent AI, and there are a few, but they still have real trouble with the question of what humans are for in this picture. In many of those novels the humans kind of feel useless. Even if everything else is going well, the writers still haven't figured out how the humans avoid feeling useless.

ALEX WOODSON: What are those novels? I asked Wendell Wallach a similar question about that a year ago.

STUART RUSSELL: Probably the one that several people pointed me to is the series called the Culture novels by Iain Banks. That's sort of fairly well-fleshed-out through a series of novels. The Culture is a future pan-human, pan-galactic civilization where we have AI systems that are far, far more intellectually capable than us.

There's one scene where there's a human pilot on a military spaceship that gets into a battle, and basically the AI system that is in charge of the spaceship says: "Okay, we're going to have a battle now, so I'm going to encase you in foam so that you don't get shaken up too much. Okay. It's over." So in the space of three-eighths of a second the entire battle has happened, and thousands of spaceships have been destroyed. Then the spaceship basically does a slow-motion replay for the humans so they can see what happened, and you really get a sense of the humans being a bit frustrated because they didn't really get to participate.

ALEX WOODSON: It sounds better than AI destroying the world, at least.

STUART RUSSELL: Yes. That's true. You could argue if overreliance on AI is in the long term debilitating for humans, then an AI system that understands enough about human preferences will obviously know that, and then the AI system would say, more or less, "Tie your own shoelaces."

That leaves human beings a little bit in the role of children. We tell our children to tie their own shoelaces so that they acquire independence and skills and autonomy. Is that a role that we want the human race to have? Is there any other way for it to be besides the AI systems, in some sense, becoming our parents: that they understand us much better than we do, they understand how the future is going to unfold, and they want to leave us with some autonomy so that we grow? That's a question.

The other question is, are we going to allow it? Because we are somewhat shortsighted, we will always say, "No, no, tie my shoelaces." So we may try to design out of AI systems the ability to stand back and let humans grow, and that would probably be a bad idea in the long run.

ALEX WOODSON: Dr. Russell, thank you so much.

STUART RUSSELL: My pleasure.