
When we asked ChatGPT what advances in artificial intelligence mean for the human condition, it responded that AI will "change the way people view their own abilities and skills and alter their sense of self." It could "impact people's sense of identity and purpose." It could "change the way people form and maintain relationships and impact their sense of community and belonging." The progress made in 2022 by generative AI, which includes large language models such as ChatGPT and image generators such as Dall-E, is awe-inspiring. When ChatGPT eloquently warns us about its own potential impact on the human sense of self, purpose, and belonging, we are naturally impressed. But large language models can only give back to us what we have fed to them. Through statistical associative techniques and reinforcement learning, the algorithm behind ChatGPT can ferret out and regurgitate insightful words and concepts, but there is no sense in which it understands the depth of meaning these words and concepts carry.

Joseph Weizenbaum, a pioneer of computer science and the creator of ELIZA, the 1965 conversational program that led some users to believe it was human, cautioned against "magical thinking" about technology's potential to improve the human condition. When we have unrealistically high expectations that a technology will solve our problems, we can forget to think deeply about its limitations and potential negative impacts. Weizenbaum later said he was deeply troubled both that people engaging with ELIZA did not fully understand they were interacting with a computer and by the inherent human tendency to become uncritically reliant on technology. He perceived this as a moral failing in a society catering to reductionist narratives.

Hannah Arendt, the trailblazing political theorist, argued in her 1958 book The Human Condition that certain activities, which she called labor, work, and action, give humans their sense of identity and dignity. Undermining that dignity is a grave mistake. In the current AI discourse, we perceive a widespread failure to appreciate why it is so important to champion human dignity. There is a risk of creating a world in which meaning and value are stripped from human life.

In 2023, we need to have an intellectually honest debate about both the value and limits of the AI systems that are rapidly being embedded in our everyday lives, and about how we understand ourselves in interaction with them.

Social media platforms provide a cautionary example. They were initially lauded for their potential to bring us together, but their many harmful downsides have become so deeply entrenched in how we, and society as a whole, operate that it is hard to think of ways to uproot the weaponization of truth and the "othering" that cleave societies and people apart. Can social media platforms ever deliver on the promise they once held, or was this, too, just an illusion?

We might, in a few years, look back and lament that generative AI had the same destructive impact on education that social media has had on truth. Students are submitting output from ChatGPT without editing it, and educators are scrambling to devise assignments that ChatGPT cannot be used to circumvent. Even though these language models are a far cry from any form of artificial general intelligence with common-sense reasoning and the capacity to understand what they are doing, their importance is undeniable.

But the output from generative AI models still falls far short of representing collective intelligence, let alone wisdom. Therefore, in 2023, we must seek to develop a new paradigm that facilitates collaborative problem-solving, integrating the best of machine intelligence with human wisdom. The incentive structure for AI research and the deployment of applications should be redirected away from technology that replaces human work and toward applications that augment and expand collective and collaborative intelligence. For this to materialize, we need to ask ourselves: What does a complete and comprehensive reset look like, and how might it be enabled?

Over the past few years, the AI ethics discourse has revolved around two questions we have touched on already. How will AI change what it means to be human? And how can we manage the tradeoffs between the ways in which AI improves and worsens the human condition?

These questions are tangled up with a third question that does not receive adequate attention: Who wins and who loses when systems built around AI are deployed in every sector of the economy? The current revolution in data and algorithms is redistributing power in a way unlike any previous historical shift. Incentive structures steer the deployment of AI toward applications that exacerbate structural inequalities: the aim is typically to replace human work rather than to enhance human well-being.

In past industrial revolutions, machinery also replaced human labor, but the productivity gains did not all accrue to the owners of capital; those gains were shared with labor through better jobs and wages. Today, for every job that is automated, all the productivity gains go to the owners of capital. In other words, as AI systems narrow the range of work that only humans can do, the productivity gains accrue only to the owners of the systems: those of us with stocks and other financial instruments. And, as we all know well, the development of AI is largely controlled by an oligopoly of tech leaders with inordinate power to dictate its societal impact and our collective future.

Furthermore, AI applications are increasingly being developed to track and manipulate humans, whether for commercial, political, or military purposes, by all available means, including deception. Are we at risk of becoming the robots AI is designed to manipulate?

We need to begin thinking about AI comprehensively, not in a piecemeal manner. How can we ensure that the technologies currently being developed are used for the common good, rather than for the benefit of a select few? How can we incentivize businesses to deploy generative AI models in ways that equip employees with deeper and highly valuable skills, instead of making them superfluous? What kind of tax structure will disincentivize replacing human workers? What public policies and indexes are needed to calculate and redistribute goods and services to those adversely affected by technological unemployment and environmental impact? What limits should be placed on AI being used to manipulate human behavior?

Addressing questions like these requires breaking what the sociologist Pierre Bourdieu and the anthropologist, journalist, and author Gillian Tett have called "social silences": topics that are widely considered taboo or are simply not openly discussed. In AI, social silences surround potential risks and negative impacts, from job displacement and the erosion of privacy to the exclusion of diverse voices from discussions about the development and deployment of AI systems. Attention to AI ethics is a start, but to date it has had limited impact on the design of the new tools being developed and deployed.

When risks are not widely discussed, measures cannot be taken to mitigate them. Social silences can perpetuate inequalities, undermine empathy between different groups, and contribute to social conflict. When diverse perspectives are not represented in discussions about technologies, the technologies cannot be effectively challenged before—or even after—they are embedded in society.

The issue of social silences speaks to a deeper question: Who decides what we talk about in relation to AI, and what we do not? The development of AI is not just about the technology itself; the narratives we use to discuss AI also matter. The dominant narratives are rooted in "scientism," a belief system that assumes human intelligence can be reduced to physics and recreated from the bottom up. Scientism functions as a theology that skews the way we understand what technologies can and cannot do. It either ignores the role humans can and should play in directing the evolution of technology, or it surrenders that role to those who have the most to gain from a culture dominated by the possibilities AI affords.

Hannah Arendt also famously wrote about the "banality of evil": the way ordinary individuals can be drawn into complicity in acts of wrongdoing without fully realizing the consequences of their actions.

With this in mind, those who work on the research, development, and deployment of AI systems are now the front line of defense against potentially pernicious and dangerous AI applications. Most legislators may not even understand systems such as ChatGPT. Even if they did, their approach would be compromised by the perceived political imperative to champion innovations that promise to advance economic productivity and national competitiveness.

As a result, generative AI models such as ChatGPT, and many others, are effectively ungoverned except for a select set of somewhat flimsy industry guidelines, standards, and codes of practice. And we worry about "governance invisibility cloaks," whereby guidelines are written and implemented in ways that ostensibly address ethical problems but in reality obfuscate them and allow them to be ignored or bypassed.

On a positive note, however, we were gratified in 2022 to see a growing interest in ethics as a way to navigate AI's inherent dilemmas. At conferences, for example, we see engagement from scientists and engineers who would have been dismissive ten years ago. There is a growing appreciation of what it means to embed ethics in AI applications in practice. There is also a growing realization that the whole model in place is askew and is leading us toward a future that distorts fundamental values and undermines human dignity.

There is a need for a reset!

Ethics is a language that can facilitate this reset. Ethics helps us deal with uncertainty when, for example, we do not have all the information we need to guide decisions or cannot predict the consequences of various courses of action. It helps us identify the questions we need to ask in order to remain humble and to navigate gaps in our understanding, existing and emerging tension points, and the tradeoffs inherent in differing options and choices.

We may be criticized for emphasizing the undesired societal impacts of AI. To be sure, the manifold benefits of AI are apparent. But does the upside of AI systems truly justify the massive and growing array of downsides?

J. Robert Oppenheimer, who led the Los Alamos laboratory where the Manhattan Project developed the atomic bombs used against Japan at the end of World War II, once said: “It is not possible to be a scientist unless you believe that the knowledge of the world, and the power which this gives, is a thing which is of intrinsic value to humanity, and that you are using it to help in the spread of knowledge, and are willing to take the consequences.”

The negative impacts can, of course, be defused, but only if they are given adequate attention and only through the will to do so. Whatever we decide to do next, it is imperative that developers and public decision-makers alike are "willing to take the consequences." In the words of the neuroscientist and author Anil Seth: "We ought to be concerned not just about the power that new forms of artificial intelligence are gaining over us, but also about whether and when we need to take an ethical stance towards them."

AI has the potential to bring us together, to address environmental challenges, to improve public health, and to improve industrial efficiency and productivity. Yet it is also increasingly used to cement and sustain divisions and to exacerbate existing forms of inequity. Prioritizing transparency and accountability alone is insufficient. There is indeed a need to take an ethical stance.

While we may marvel at the advances AI brings, for effective technology governance to truly materialize, a systemic reset directed at improving the human condition is required. AI and the techno-social system supporting it must not oppress, exploit, demean, or manipulate us or the environment. This is not merely a challenge for governance; it is a question of recognizing the social and political rights, as well as the dignity, of every human life.

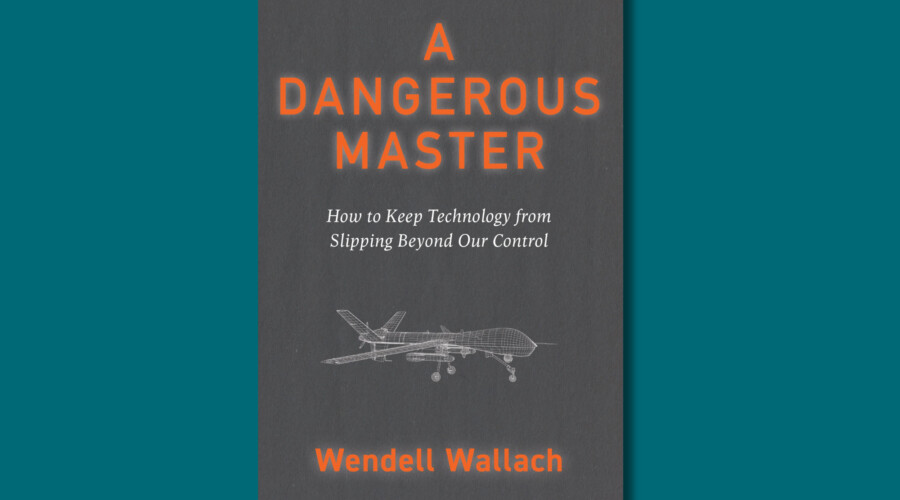

Anja Kaspersen is a Senior Fellow and Wendell Wallach is a Carnegie-Uehiro Fellow at Carnegie Council for Ethics in International Affairs, where they co-direct the Artificial Intelligence & Equality Initiative (AIEI).

Carnegie Council for Ethics in International Affairs is an independent and nonpartisan nonprofit. The views expressed within this article are those of the authors and do not necessarily reflect the position of Carnegie Council.